This process is repeated recursively until the decision tree is fully grown.įor example, if using Gini impurity, data records are recursively split into two groups such that the weighted average impurity of the resulting groups is minimized. Gini impurity, information gain, mean squared error (MSE), among others.

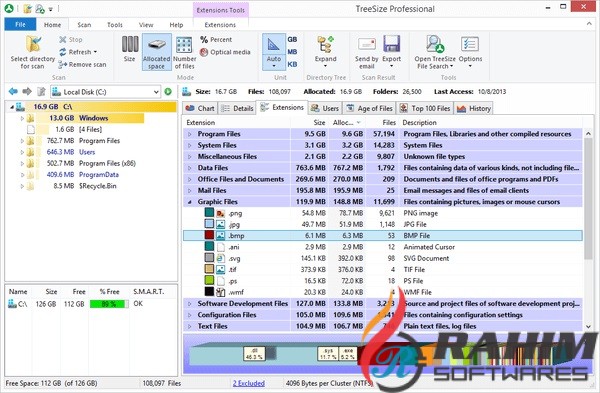

Here “ gain” is determined by the split criterion, which can be based on a few different quantities, e.g. In the case of decision trees, the Greedy Search determines the gain from each possible splitting option and then chooses the one that provides the greatest gain. ( I give an intuition for greedy search in a previous article on causal discovery. This is a popular technique in optimization, where we simplify a more complicated optimization problem by finding locally optimal solutions instead of globally optimal ones. Training requires a training dataset consisting of predictor variables pre-labeled with target values.Ī standard strategy for training a decision tree uses something called Greedy Search. In such cases, we can use machine learning strategies to learn the “best” decision tree for a given dataset.ĭata can be used to grow decision trees in an optimization process called training. However, it may not be easy to draw out an appropriate decision tree by hand using data.

#Treesize free download how to

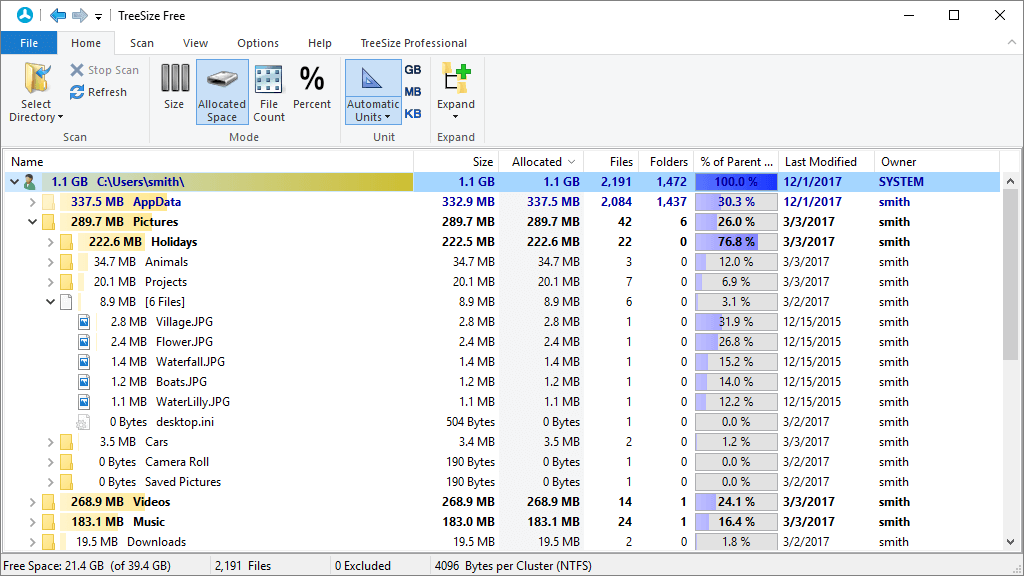

How to Grow a Decision Tree?ĭecision trees are an intuitive way to partition data. Graphical view of decision tree predictions for tea or coffee example. Then using the decision tree above, we can assign an appropriate caffeinated beverage to each record (green column). Here we have tabular data with two variables: time of day and hours of sleep from the previous night (blue columns). All we have to do is give the computer the data it needs in the form of a table.Īn example of this is shown below. Rather, have a computer evaluate data for us. looking at a decision tree and following along for a particular data record). In practice, we often don’t use decision trees like we did just now (i.e.

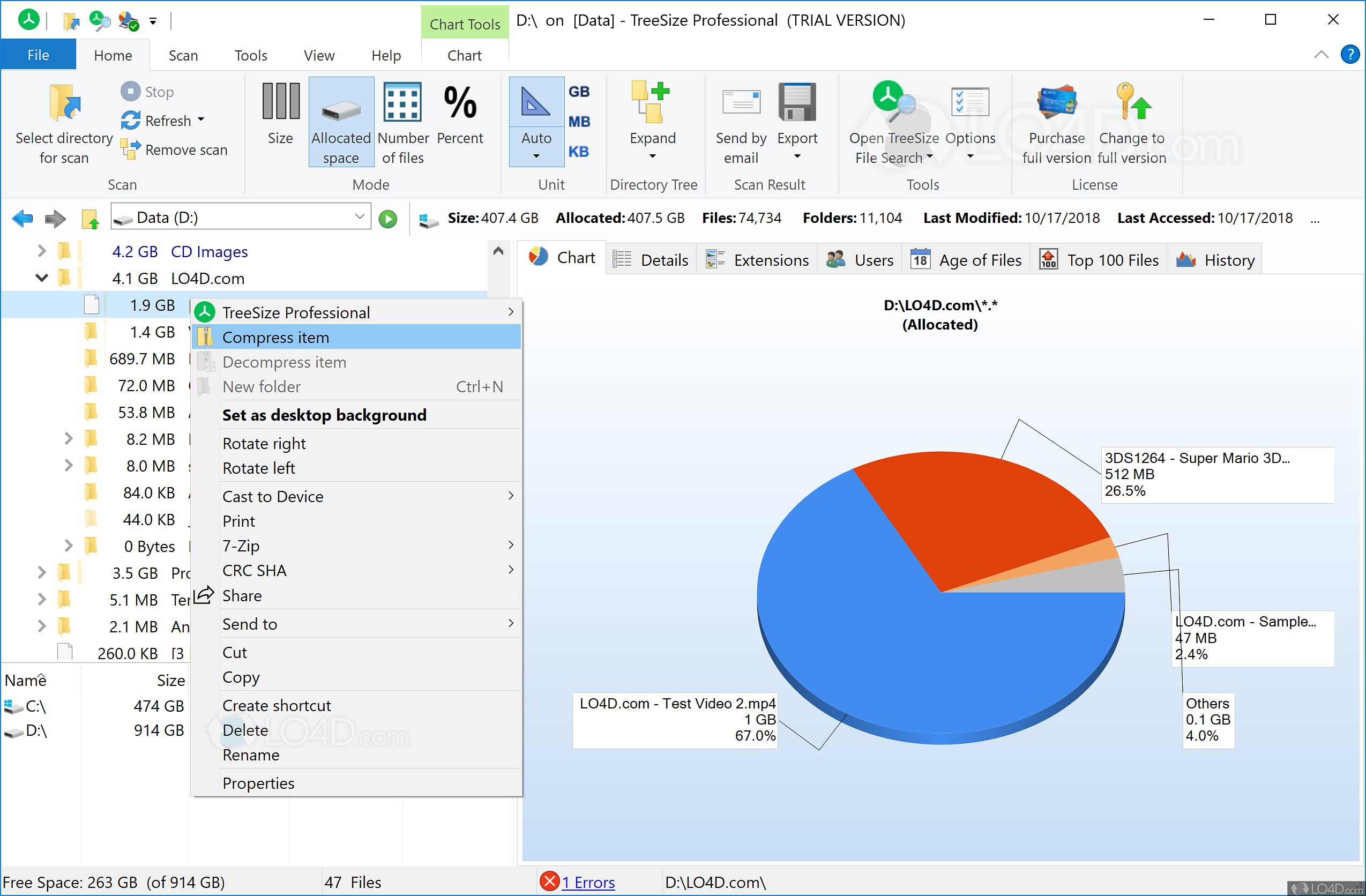

If yes, we go with tea again, but if no, we go with coffee ☕️. From here, we evaluate whether the hours of sleep from last night were more than 6 hours. These nodes further split data records based on conditional statements. In this case, we go with tea over coffee so we can get to bed at a reasonable hour.Ĭonversely, if it is 4 PM or earlier, we follow the right branch and end up at a so-called splitting node. No further splits are required to determine the outcome at this type of node. If yes, we follow the left branch and end up at a leaf node (also called the terminal node). Each possible response (yes or no) follows a different path in our tree. Here we evaluate whether it is after 4 PM or not. Each node in a decision tree corresponds to a conditional statement based on a predictor variable.Īt the top of the decision tree shown above is the root node, which sets the initial splitting of data records. Image by author.Īs shown in the above figure, a decision tree consists of nodes connected by directed edges. Example decision tree to predict whether I will drink tea or coffee.
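To make the greedy, recursive splitting with Gini impurity more concrete, here is a minimal Python sketch of a CART-style tree grower. Gini impurity is computed as 1 − Σₖ pₖ², where pₖ is the fraction of records in class k. This is an illustration under stated assumptions. It is not the author's code or any library's implementation. Specifically:

- The function names (`gini`, `best_split`, `grow`, `predict`, `tea_or_coffee`) are hypothetical.
- Time of day is encoded as an hour on a 24-hour clock, so 16 means 4 PM.
- Every split is a threshold test of the form `feature <= t`.
- The training records are a made-up grid labeled with the hand-drawn tea-or-coffee tree, not the table in the figure.

```python
from collections import Counter

def gini(labels):
    """Gini impurity: 1 - sum_k p_k**2, where p_k is the share of class k."""
    n = len(labels)
    if n == 0:
        return 0.0
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())

def best_split(rows, labels):
    """Greedy step: try every (feature, threshold) pair and return the one whose
    two child groups have the lowest weighted average Gini impurity."""
    best = None  # (weighted_impurity, feature_index, threshold)
    n = len(rows)
    for j in range(len(rows[0])):
        for t in sorted({r[j] for r in rows}):
            left = [y for r, y in zip(rows, labels) if r[j] <= t]
            right = [y for r, y in zip(rows, labels) if r[j] > t]
            if not left or not right:
                continue  # a split must produce two non-empty groups
            score = (len(left) * gini(left) + len(right) * gini(right)) / n
            if best is None or score < best[0]:
                best = (score, j, t)
    return best

def grow(rows, labels, depth=0, max_depth=5):
    """Recursively apply best_split until a node is pure or max_depth is reached."""
    if gini(labels) == 0.0 or depth >= max_depth:
        return Counter(labels).most_common(1)[0][0]  # leaf: majority class
    split = best_split(rows, labels)
    if split is None:  # no valid split (all feature values identical)
        return Counter(labels).most_common(1)[0][0]
    _, j, t = split
    go_left = [r[j] <= t for r in rows]
    return {
        "feature": j,
        "threshold": t,
        "left": grow([r for r, g in zip(rows, go_left) if g],
                     [y for y, g in zip(labels, go_left) if g], depth + 1, max_depth),
        "right": grow([r for r, g in zip(rows, go_left) if not g],
                      [y for y, g in zip(labels, go_left) if not g], depth + 1, max_depth),
    }

def predict(node, row):
    """Follow the splitting nodes from the root down to a leaf."""
    while isinstance(node, dict):
        node = node["left"] if row[node["feature"]] <= node["threshold"] else node["right"]
    return node

def tea_or_coffee(hour, sleep):
    """The hand-drawn tree from the example, used here only to label toy data."""
    if hour > 16:  # after 4 PM: leaf node
        return "tea"
    return "tea" if sleep > 6 else "coffee"

# Hypothetical records: (hour of day, hours of sleep last night)
rows = [(h, s) for h in (8, 10, 12, 14, 16, 18, 20) for s in (4, 5, 6, 7, 8)]
labels = [tea_or_coffee(h, s) for h, s in rows]

tree = grow(rows, labels)
print(tree)
print(all(predict(tree, r) == y for r, y in zip(rows, labels)))  # True
```

On this toy grid, the greedy search picks hours of sleep (feature 1, threshold 6) for the root rather than time of day. The learned tree still classifies every training record the same way as the hand-drawn one. Because each split is only locally optimal, a learned tree need not look like the tree a person would draw.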
